Voice Activity Detection for Voice User Interface.

By Rudy Baraglia

As part of the R&D team at Linagora, I have worked on several speech-based technologies involving Voice Activity Detection (VAD) across different projects: in OpenPaaS:NG, to develop an active speaker detection algorithm, and in the Linto project (the open-source intelligent meeting assistant), to detect the wake-up word and vocal activity.

Speech is the most natural and fundamental means of communication that we humans use every day to exchange information. Furthermore, at an average of 100 to 160 words spoken per minute, it is the most efficient way to share data, far exceeding typing (~40 words per minute).

Signal & Features

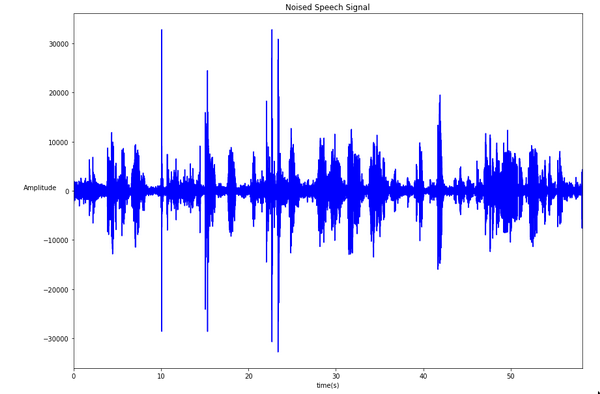

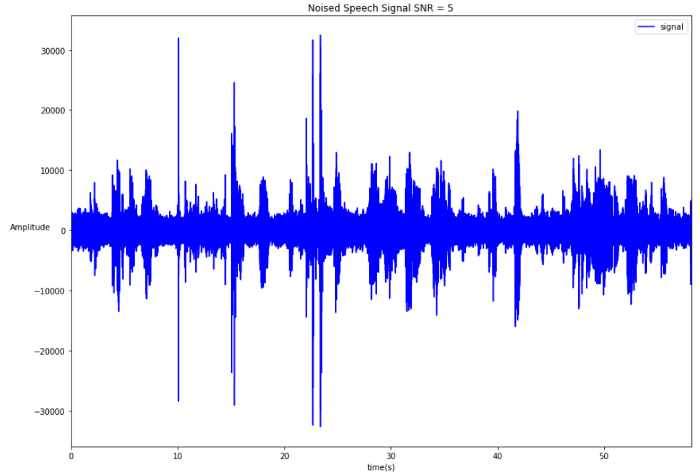

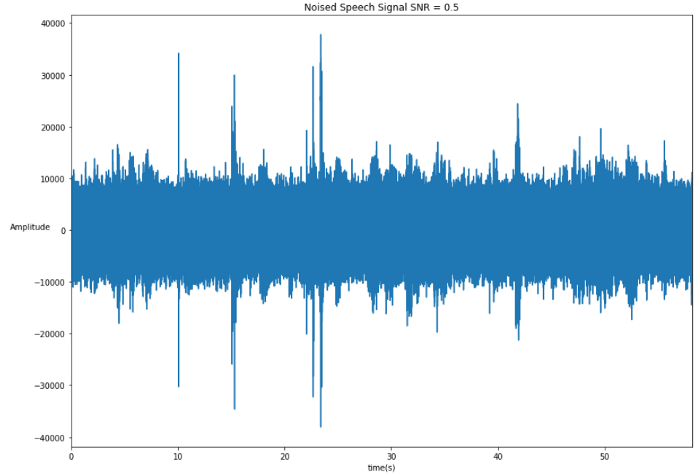

Signal to Noise Ratio.

SNR is defined as the ratio of signal power to the noise power, often expressed in decibels. A ratio higher than 1:1 (greater than 0 dB) indicates more signal than noise.

Wikipedia

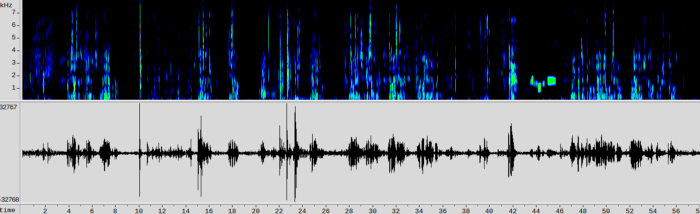
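To make the definition concrete, here is a minimal sketch of an SNR computation in decibels. It assumes we have separate sample sequences for the signal and for the noise, which is rarely the case in practice; the helper names `power` and `snr_db` are ours, chosen for illustration.

```python
import math

def power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)

def snr_db(signal, noise):
    """SNR in decibels: 10 * log10(signal power / noise power)."""
    return 10.0 * math.log10(power(signal) / power(noise))

# Toy example: a square wave of amplitude 1 against noise of amplitude 0.1.
signal = [1.0, -1.0] * 50
noise = [0.1, -0.1] * 50
print(f"SNR: {snr_db(signal, noise):.1f} dB")
```

An amplitude ratio of 10 corresponds to a power ratio of 100, hence an SNR of about 20 dB; equal powers give 0 dB, the 1:1 ratio mentioned above.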

Signal Analysis

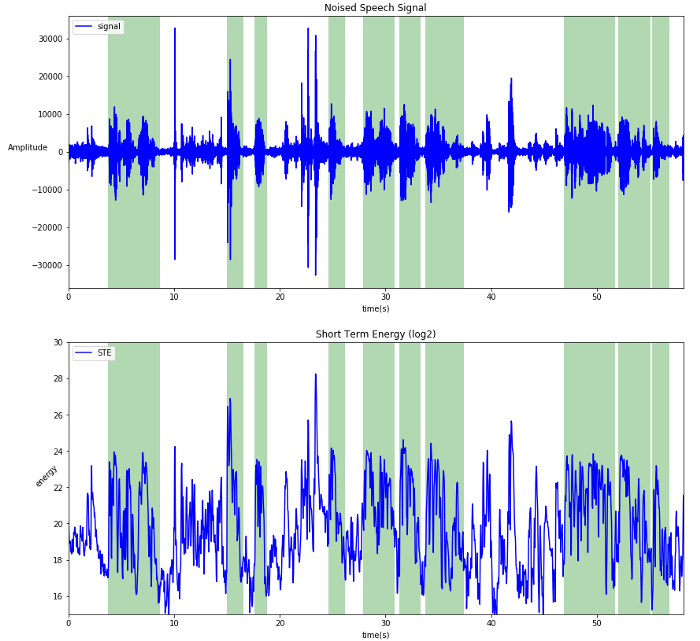

Short-Term Energy

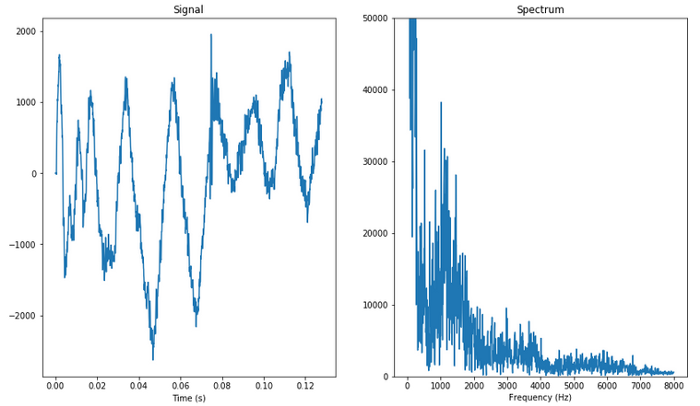
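As a rough illustration, short-term energy can be computed by cutting the signal into frames and summing the squared samples of each frame. The sketch below assumes this common definition; the frame length and hop size are free parameters that we picked for the example, not values prescribed here.

```python
def short_term_energy(samples, frame_len, hop):
    """Return the energy (sum of squared samples) of each full frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(sum(s * s for s in frame))
    return energies

# Toy example: four silent samples followed by four samples of amplitude 1.
samples = [0.0] * 4 + [1.0] * 4
print(short_term_energy(samples, frame_len=4, hop=4))
```

Frames that contain speech stand out with a much higher energy than silent ones, which is what makes this feature usable with a simple threshold.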

From now on we'll be working in another domain: the frequency domain.

Fourier Transform

Spectrogram

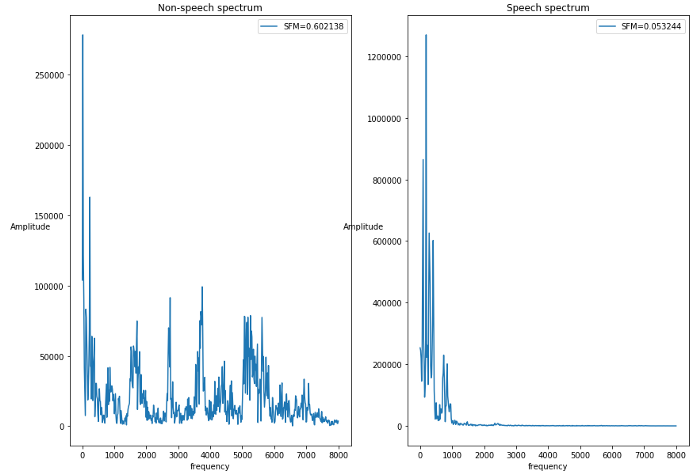

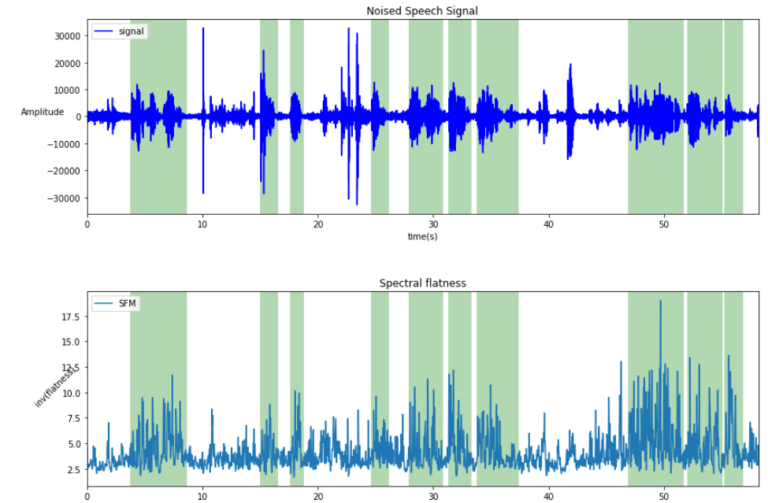

Spectral Flatness Measure (SFM)

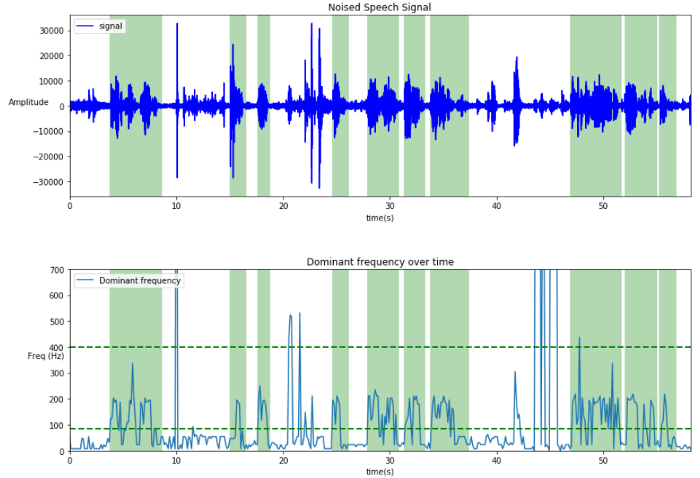
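Spectral flatness is usually defined as the ratio of the geometric mean to the arithmetic mean of the power spectrum: close to 1 for a flat, noise-like spectrum and close to 0 for a tonal one. The sketch below assumes that definition. It uses a naive DFT to stay dependency-free (a real implementation would use an FFT), and the small `eps` added to avoid taking the logarithm of zero is our own choice.

```python
import cmath
import math

def power_spectrum(frame):
    """One-sided power spectrum of a frame via a naive DFT (O(n^2))."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(frame))) ** 2
        for k in range(n // 2 + 1)
    ]

def spectral_flatness(frame, eps=1e-12):
    """Geometric mean divided by arithmetic mean of the power spectrum."""
    spectrum = [p + eps for p in power_spectrum(frame)]
    geometric = math.exp(sum(math.log(p) for p in spectrum) / len(spectrum))
    arithmetic = sum(spectrum) / len(spectrum)
    return geometric / arithmetic

# An impulse has a perfectly flat spectrum; a pure tone has a single peak.
impulse = [1.0] + [0.0] * 63
tone = [math.sin(2 * math.pi * 8 * t / 64) for t in range(64)]
print(spectral_flatness(impulse), spectral_flatness(tone))
```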

Dominant Frequency

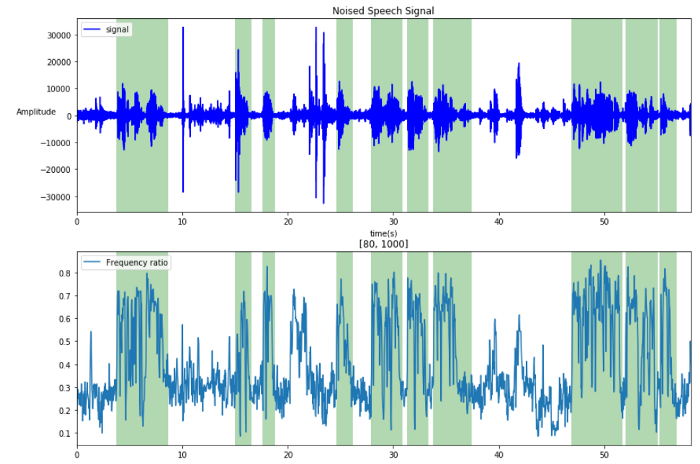

Spectrum Frequency Band Ratio

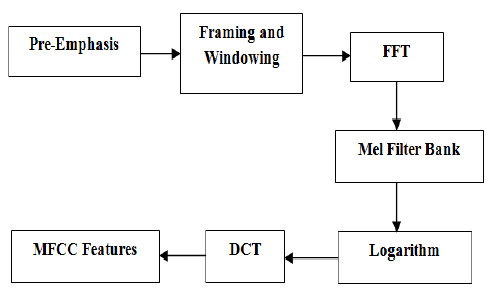
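The last two features, dominant frequency and frequency band ratio, can be sketched together. We assume here that the dominant frequency is the frequency of the strongest bin of the power spectrum, and that the band ratio is the share of spectral energy falling inside a given band. The 300 to 3400 Hz band used in the example is the classic telephony band, picked as an assumption rather than a value taken from our projects.

```python
import cmath
import math

def power_spectrum(frame):
    """One-sided power spectrum of a frame via a naive DFT (O(n^2))."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(frame))) ** 2
        for k in range(n // 2 + 1)
    ]

def dominant_frequency(frame, sample_rate):
    """Frequency (Hz) of the spectral bin holding the most power."""
    spectrum = power_spectrum(frame)
    peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
    return peak_bin * sample_rate / len(frame)

def band_ratio(frame, sample_rate, low_hz, high_hz):
    """Share of the spectral energy that lies within [low_hz, high_hz]."""
    spectrum = power_spectrum(frame)
    total = sum(spectrum)
    if total == 0:
        return 0.0
    in_band = sum(p for k, p in enumerate(spectrum)
                  if low_hz <= k * sample_rate / len(frame) <= high_hz)
    return in_band / total

# Toy example: a 1 kHz tone sampled at 8 kHz, analysed on a 64-sample frame.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(64)]
print(dominant_frequency(tone, sample_rate))
print(band_ratio(tone, sample_rate, 300, 3400))
```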

MFCC, FBANK, PLP

Unlike the previous features, which each yield a single value per frame, these return an array of values.

Decision

Thresholds

- How to determine the threshold?

- How to accommodate context variation?

- How many features?

Static threshold

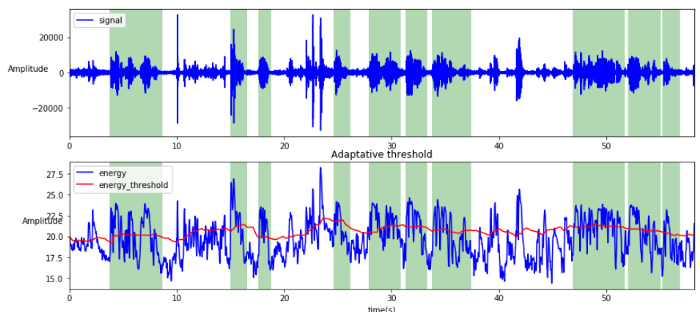

Dynamic threshold

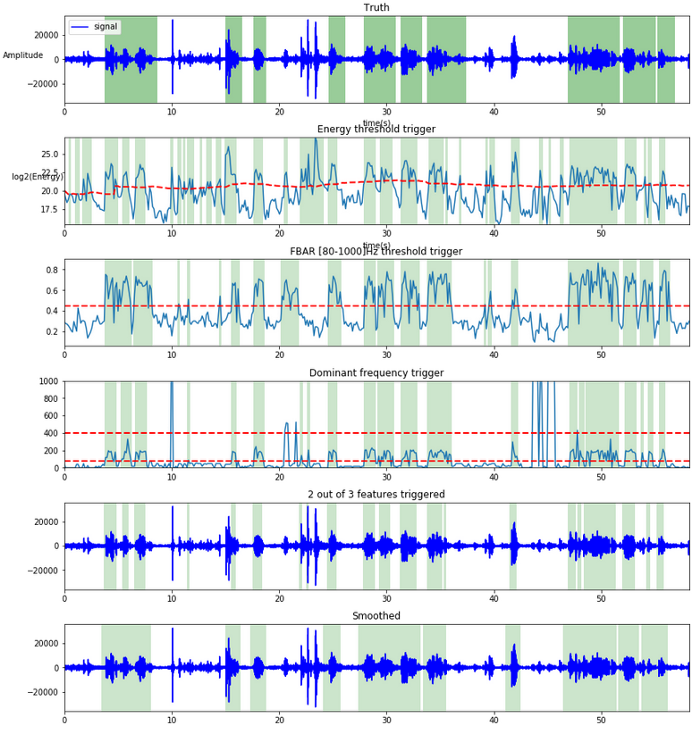
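One possible way to build a dynamic threshold is to track a running estimate of the noise floor and flag a frame as speech when its energy exceeds that estimate by some factor. The sketch below is only one such scheme under our own assumptions: the noise floor is initialised from the first few frames (assumed to be silent) and then updated with an exponential average on frames tagged as silence. The parameters `init_frames`, `factor` and `alpha` are hypothetical values chosen for illustration.

```python
def dynamic_threshold_vad(energies, init_frames=5, factor=3.0, alpha=0.9):
    """Tag each frame energy as speech (True) or silence (False)."""
    if not energies:
        return []
    # Initial noise estimate from the first frames, assumed to be silence.
    head = energies[:init_frames]
    noise = sum(head) / len(head)
    decisions = []
    for energy in energies:
        is_speech = energy > factor * noise
        if not is_speech:
            # Let the noise floor follow slow changes in the background.
            noise = alpha * noise + (1 - alpha) * energy
        decisions.append(is_speech)
    return decisions

energies = [1.0] * 5 + [10.0] * 3 + [1.0] * 2
print(dynamic_threshold_vad(energies))
```

Because the noise estimate is only updated on frames tagged as silence, a long utterance does not drag the threshold upwards.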

Decision rule using multiple features.

Machine Learning

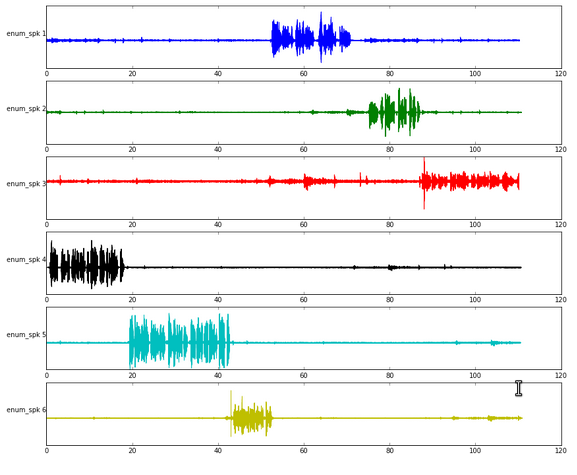

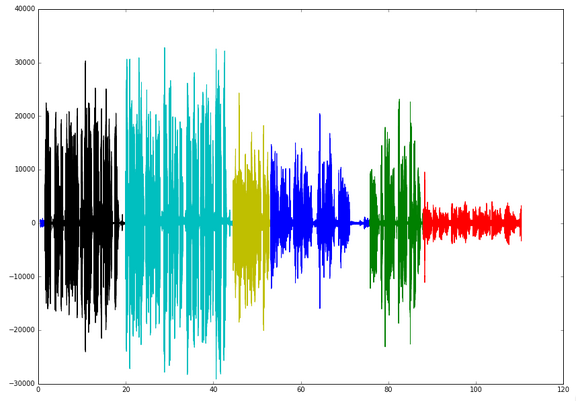

Competing Signals

Smoothing

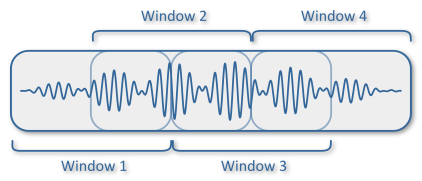

- To be considered speech, there must be at least 3 consecutive windows tagged as speech (192 ms). This prevents short noises from being classified as speech.

- To be considered silence, there must be at least 3 consecutive windows tagged as silence. This prevents too many cuts within speech, which would disrupt its rhythm.

- If a window is considered speech, the previous 3 windows and the following 3 windows are also considered speech. This prevents the loss of information at the beginning and at the end of a sentence.
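The three rules above can be sketched as a post-processing pass over the per-window decisions. The order in which the rules are applied, and the choice to only fill short silences that are surrounded by speech, are assumptions on our part; the function names are hypothetical.

```python
def runs(labels):
    """Yield (start, end, value) for each maximal run of equal labels."""
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            yield start, i, labels[start]
            start = i

def flip_short_runs(labels, value, min_len, interior_only=False):
    """Flip runs of `value` shorter than `min_len` to the opposite label."""
    out = list(labels)
    for start, end, v in runs(labels):
        at_edge = start == 0 or end == len(labels)
        if v == value and end - start < min_len and not (interior_only and at_edge):
            out[start:end] = [not value] * (end - start)
    return out

def pad_speech(labels, pad):
    """Mark the `pad` windows before and after each speech window as speech."""
    out = [False] * len(labels)
    for i, is_speech in enumerate(labels):
        if is_speech:
            for j in range(max(0, i - pad), min(len(labels), i + pad + 1)):
                out[j] = True
    return out

def smooth(labels, min_len=3, pad=3):
    """Apply the three smoothing rules to a list of booleans (True = speech)."""
    labels = flip_short_runs(labels, True, min_len)         # rule 1: drop short noises
    labels = flip_short_runs(labels, False, min_len, True)  # rule 2: fill short gaps
    return pad_speech(labels, pad)                          # rule 3: pad both sides

raw = [False] * 4 + [True] + [False] * 5 + [True] * 3 + [False] * 2 + [True] * 3 + [False] * 8
print(smooth(raw))
```

In this example the isolated speech window is discarded, the two-window gap between the speech segments is filled, and the merged segment is extended by three windows on each side.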

Application

Here are some examples of how we use VAD.

Detect a single word.

Detect a sentence.

- Energy with a dynamic threshold calculated before and during the process.

- Frequency band ratio is computed during the recording as an additional condition.

Conclusion
